Implementing Retrieval-Augmented Generation (RAG) systems is inherently complex: it requires a deep understanding of the data and the use case, and it involves many intricate design decisions. Evaluating these systems is also challenging, because both retrieval accuracy and generative quality have to be assessed, which calls for a multi-faceted approach.

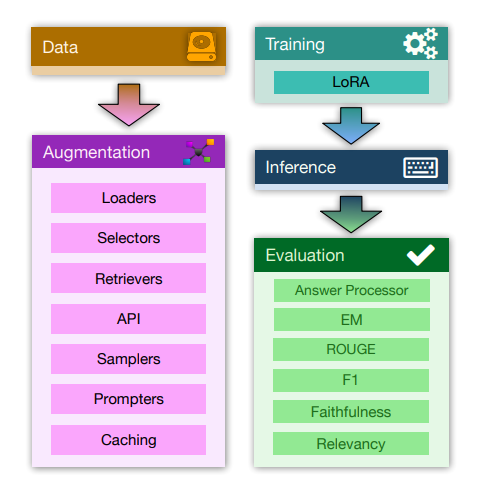

RAG Foundry is an open-source framework for augmenting large language models for RAG use cases. It integrates data creation, training, inference, and evaluation into a single workflow, facilitating the creation of data-augmented datasets for training and evaluating large language models in RAG settings.

The RAG Foundry framework is designed for rapid prototyping and experimentation and consists of four main modules:

1. Dataset Creation: Manages the creation and processing of datasets for RAG training and inference, including data normalization, aggregation, retrieval, API integration, and prompt creation. Data is saved in a consistent format.
2. Training: Uses Parameter-Efficient Fine-Tuning (PEFT) and Transformer Reinforcement Learning (TRL) to train models on the augmented datasets. Trained models can be uploaded to the Hugging Face Hub.
3. Inference: Generates predictions with trained or untrained language models on the augmented datasets.
4. Evaluation: Assesses the outputs of the inference module using metrics such as Exact Match (EM), F1, ROUGE, and BERTScore. Metrics can be customized and applied either locally (per example) or globally (over the whole dataset).
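
To make the EM and F1 metrics mentioned in the evaluation module concrete, here is a minimal sketch of how they are commonly computed for question answering. It is not taken from RAG Foundry: the function names (`normalize`, `exact_match`, `token_f1`, `evaluate`) are hypothetical, and the normalization rules (lowercasing, stripping punctuation and English articles) are an assumption based on the usual SQuAD-style convention.

```python
import re
import string
from collections import Counter


def normalize(text: str) -> str:
    """Lowercase, drop punctuation and English articles, collapse whitespace.

    Assumed SQuAD-style normalization; not RAG Foundry's own code.
    """
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, reference: str) -> float:
    """Return 1.0 if the normalized strings are identical, else 0.0."""
    return float(normalize(prediction) == normalize(reference))


def token_f1(prediction: str, reference: str) -> float:
    """Harmonic mean of token-level precision and recall."""
    pred_tokens = normalize(prediction).split()
    ref_tokens = normalize(reference).split()
    if not pred_tokens or not ref_tokens:
        # Both empty counts as a match; only one empty counts as a miss.
        return float(pred_tokens == ref_tokens)
    # Multiset intersection counts each shared token at most as often
    # as it appears in both sequences.
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


def evaluate(predictions: list[str], references: list[str]) -> dict[str, float]:
    """Score each example, then average the per-example scores."""
    if not predictions or len(predictions) != len(references):
        raise ValueError("predictions and references must be non-empty and aligned")
    em = [exact_match(p, r) for p, r in zip(predictions, references)]
    f1 = [token_f1(p, r) for p, r in zip(predictions, references)]
    return {"EM": sum(em) / len(em), "F1": sum(f1) / len(f1)}
```

Here each example is scored on its own and the scores are then averaged, which is one simple way to report a dataset-level figure. Metrics such as ROUGE and BERTScore would normally come from an existing library rather than being written by hand.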

The framework's effectiveness is demonstrated by augmenting and fine-tuning Llama-3 and Phi-3 models with diverse RAG configurations, which shows consistent improvements across three knowledge-intensive datasets: TriviaQA, ASQA, and PubMedQA.

Paper: https://arxiv.org/pdf/2408.02545